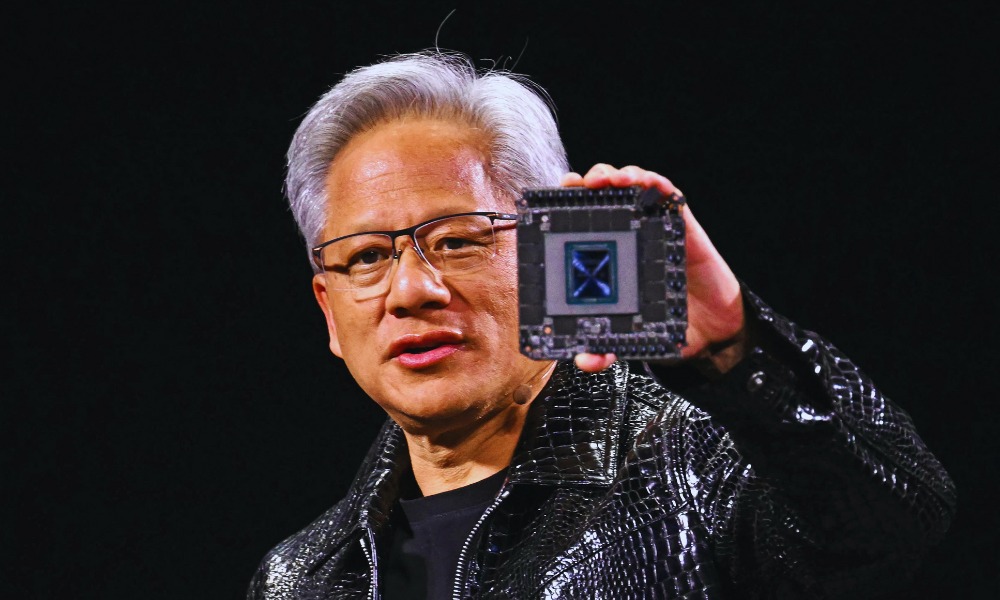

Nvidia CEO Jensen Huang Praises DeepSeek’s R1 as First Open-Source Reasoning AI Model

In an interview with CNBC’s Jim Cramer at Nvidia’s annual GTC conference, CEO Jensen Huang discussed the significance of Chinese startup DeepSeek’s latest artificial intelligence model.

Huang highlighted that DeepSeek’s R1 model will require far more computational power than many experts had anticipated.

Huang described the R1 model as “fantastic” and groundbreaking because it is “the first open-sourced reasoning model.” He explained that the model can break down problems step-by-step, generate multiple answers, and even verify the accuracy of its responses.

“This reasoning AI consumes 100 times more compute than a non-reasoning AI,” Huang stated. “It was exactly the opposite, it was the exact opposite conclusion that everybody had.”

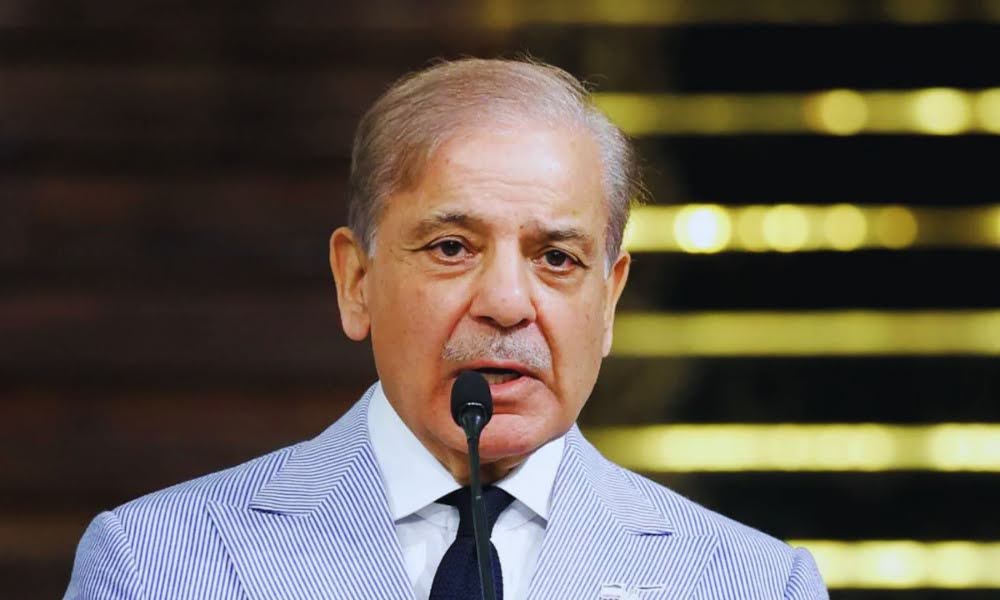

Market Impact of DeepSeek’s AI Model

The launch of DeepSeek’s model in January triggered a significant market reaction. Fears that the model could rival top AI models at a fraction of the energy use and cost led to a sharp sell-off in AI stocks.

Nvidia’s stock fell 17% in a single session, erasing nearly $600 billion in market value, the largest one-day loss ever recorded for a U.S. company.

Despite the market turbulence, Huang remains confident in Nvidia’s position. He emphasized that AI’s growing influence will drive demand for more advanced computing infrastructure, positioning Nvidia for long-term growth.

Nvidia’s New AI Infrastructure and Partnerships

During the conference, Huang also highlighted Nvidia’s latest advancements in AI infrastructure for robotics and enterprise applications.

He announced new partnerships with major companies, including Dell, HPE, Accenture, ServiceNow, and CrowdStrike.

Huang reflected on how the AI landscape is shifting from a focus on generative AI to reasoning models.

He projected that global computing capital expenditures could reach $1 trillion by the end of the decade, with the majority of that investment directed toward AI development.

“So, our opportunity as a percentage of a trillion dollars by the end of this decade is, is quite large,” Huang said. “We’ve got a lot of infrastructure to build.”

Upcoming Nvidia Product Launches

During the keynote session at the GPU Technology Conference (GTC), Huang announced several major product releases. The most notable are Nvidia’s next-generation GPU architectures, Blackwell Ultra and Vera Rubin.

According to a Citi analyst’s Thursday report, the new Rubin GPU will include 288GB of HBM4 DRAM, a 50% increase in memory content over the current Blackwell generation.

Citi analyst Christopher Danely views this as a positive development for memory chipmaker Micron Technology, which is expected to benefit from higher margins on HBM products.

“The new Rubin chip will contain 288GB of HBM4 DRAM, 50% more content compared to the current Blackwell generation,” Danely noted. “We believe this is good news for Micron as we believe Micron’s HBM gross margins is roughly 70%.”

TrendForce, a research firm, recently adjusted its Q2 2025 DRAM price forecast from a flat 0% quarter-over-quarter change to an increase of 3%–8%.

Danely sees this as further support for Micron’s improved pricing outlook starting in the second quarter of 2025.

Co-Packaged Optics Technology and Market Impact

Nvidia also revealed its new co-packaged optics (CPO) technology, a next-generation networking solution. However, Danely does not expect this to impact Broadcom, a leader in the networking sector.

Broadcom had already introduced similar technology in March 2024, and Nvidia’s CPO is not expected to be available until the second half of 2026.

Furthermore, Broadcom is likely to benefit from the growing trend of hyperscalers developing their own custom accelerator chips to reduce costs and dependence on Nvidia.

Danely predicts that Broadcom’s custom ASICs, which are more cost-effective for specific applications, could capture approximately 25% of the $380 billion AI accelerator total addressable market (TAM) by 2028. GPUs are expected to account for the remaining 75%.
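For readers who want the dollar figures behind that split, the short sketch below works through the arithmetic. It uses only the $380 billion TAM and the 25% ASIC share cited above; the function name `split_tam` and the assumption that GPUs take the entire remainder are illustrative, not part of the analyst's report.

```python
# Illustrative arithmetic only: inputs are the figures cited in the article,
# and split_tam is a hypothetical helper, not an analyst model.
TAM_2028_USD_BN = 380   # projected 2028 AI accelerator TAM, in billions of USD
ASIC_SHARE = 0.25       # share attributed to custom ASICs


def split_tam(tam_bn: float, asic_share: float) -> tuple[float, float]:
    """Return (asic_bn, gpu_bn) in billions of USD for a given ASIC share."""
    asic_bn = tam_bn * asic_share
    gpu_bn = tam_bn - asic_bn  # assumes GPUs account for everything else
    return asic_bn, gpu_bn


asic_bn, gpu_bn = split_tam(TAM_2028_USD_BN, ASIC_SHARE)
print(f"Custom ASICs: ~${asic_bn:.0f}B, GPUs: ~${gpu_bn:.0f}B")
```

On these inputs, custom ASICs would represent roughly $95 billion of the 2028 market and GPUs roughly $285 billion.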

Impact on Traditional Server Providers

Nvidia’s advances in the data center sector could create challenges for traditional server CPU suppliers such as Intel and Advanced Micro Devices (AMD).

“Nvidia stated that enterprises will need to update their hardware, and they are 50% of the world’s data center capex,” Danely noted.

“We continue to believe that more of the data center market will shift towards Nvidia and accelerated computing. We believe this is a mild negative for traditional server providers such as AMD and INTC.”

Future AI Landscape

Huang’s insights underscore the transformative impact of AI on the tech industry.

DeepSeek’s reasoning AI model represents a shift in how AI models operate, demanding greater computational power but offering more sophisticated problem-solving capabilities.

Nvidia’s continued investment in AI infrastructure, partnerships, and product innovation positions the company to capitalize on the growing demand for AI-driven solutions.

Despite market volatility and increased competition, Nvidia’s strategic focus on AI advancements signals long-term growth potential in an increasingly AI-driven world.